Introduction to Autonomous Sensor Fusion

Autonomous sensor fusion is a critical component in the development and deployment of unmanned autonomous vehicles (UAVs). It involves the integration of data from multiple sensors to create a comprehensive and accurate perception of the environment surrounding the vehicle. This technology enables UAVs to navigate safely and efficiently in complex and dynamic environments, making them suitable for a wide range of applications, including transportation, logistics, surveillance, and exploration.

Types of Sensors Used in Autonomous Vehicles

UAVs rely on a variety of sensors to gather data about their surroundings. Some of the most common types of sensors used in autonomous vehicles include:

- Cameras: Visual sensors that capture images and videos of the environment.

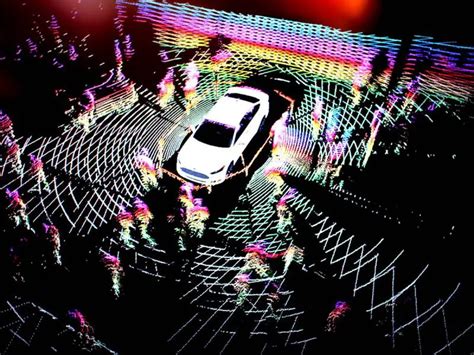

- LiDAR (Light Detection and Ranging): A remote sensing method that uses laser light to measure distances and create 3D maps of the surroundings.

- Radar (Radio Detection and Ranging): A system that uses radio waves to determine the range, angle, and velocity of objects.

- Ultrasonic sensors: Sensors that emit high-frequency sound waves to detect nearby objects and measure distances.

- GPS (Global Positioning System): A satellite-based navigation system that provides location and time information.

- IMU (Inertial Measurement Unit): A device that measures the vehicle’s linear acceleration and angular velocity, from which its orientation can be estimated.

Sensor Fusion Techniques

Sensor fusion techniques are used to combine data from multiple sensors to create a more accurate and reliable representation of the environment. There are several approaches to sensor fusion, including:

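- Kalman Filtering: A recursive algorithm that estimates the state of a system from noisy measurements by alternating a model-based prediction step with a measurement update step. The basic filter assumes linear dynamics and Gaussian noise; extended and unscented variants handle nonlinear systems.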

- Bayesian Inference: A probabilistic approach that updates the belief about the state of the environment based on new sensor observations.

- Dempster-Shafer Theory: A framework for combining evidence from multiple sources to make decisions under uncertainty.

- Deep Learning: A subset of machine learning that uses artificial neural networks to learn patterns and features from sensor data.
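As a minimal sketch of the Kalman filtering idea listed above, the following Python example fuses range readings from two sensors with a one-dimensional filter. The names `Kalman1D` and `fuse_readings`, the static-state model, and all noise values are illustrative assumptions, not part of any particular library or vehicle stack.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """One-dimensional Kalman filter for a slowly varying scalar (hypothetical example)."""
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process noise variance added at each prediction

    def predict(self) -> None:
        # Static model: the state is assumed unchanged, but uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # z is a measurement, r is its noise variance.
        k = self.p / (self.p + r)      # Kalman gain
        self.x += k * (z - self.x)     # pull the estimate toward the measurement
        self.p *= (1.0 - k)            # uncertainty shrinks after the update


def fuse_readings(readings, x0=0.0, p0=1e6, q=0.01):
    """Fuse (measurement, variance) pairs in order and return (estimate, variance)."""
    kf = Kalman1D(x0, p0, q)
    for z, r in readings:
        kf.predict()
        kf.update(z, r)
    return kf.x, kf.p


if __name__ == "__main__":
    # Assumed values: a noisy ultrasonic reading followed by a more precise LiDAR reading.
    estimate, variance = fuse_readings([(10.2, 0.25), (9.9, 0.04)])
    print(estimate, variance)
```

The fused estimate lands between the two readings, closer to the lower-variance one, and its variance is smaller than that of either sensor alone.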

Advantages of Multiple Sensor Fusion in UAVs

Improved Accuracy and Reliability

One of the primary advantages of using multiple sensor fusion in UAVs is the improvement in accuracy and reliability of the vehicle’s perception system. By combining data from different sensors, the system can compensate for the limitations and uncertainties of individual sensors, resulting in a more robust and accurate understanding of the environment.

For example, cameras provide detailed visual information but are sensitive to lighting conditions, and a single camera does not measure depth directly. LiDAR, on the other hand, measures distances accurately but returns relatively sparse points without color or texture, may have a limited field of view depending on the unit, and can degrade in rain, fog, or snow. By fusing data from both cameras and LiDAR, the vehicle can create a more complete and reliable 3D map of its surroundings.
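One common way to combine the two sensors is to project LiDAR points into the camera image and attach a depth to each camera detection. The sketch below assumes a simple pinhole camera model and LiDAR points that have already been transformed into the camera frame; the function names, intrinsics (`fx`, `fy`, `cx`, `cy`), and sample values are hypothetical.

```python
from statistics import median


def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point (camera frame, z pointing forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        return None  # the point is behind the camera and cannot be seen
    return (fx * x / z + cx, fy * y / z + cy)


def depth_in_box(points_cam, box, fx, fy, cx, cy):
    """Return the median depth of LiDAR points that fall inside an image bounding box.

    box is (u_min, v_min, u_max, v_max) in pixels. Returns None if no point lands inside.
    """
    u_min, v_min, u_max, v_max = box
    depths = []
    for p in points_cam:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if u_min <= u <= u_max and v_min <= v <= v_max:
            depths.append(p[2])
    return median(depths) if depths else None


if __name__ == "__main__":
    # Assumed sample data: two points on the detected object, one off to the side,
    # and one behind the camera.
    points = [(1.0, 0.5, 10.0), (1.1, 0.5, 10.4), (-5.0, 0.0, 8.0), (0.0, 0.0, -2.0)]
    print(depth_in_box(points, (350, 250, 400, 300), 500.0, 500.0, 320.0, 240.0))
```

Taking the median rather than the mean is a design choice that makes the depth estimate less sensitive to stray background points that happen to fall inside the box.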

Redundancy and Fault Tolerance

Another benefit of multiple sensor fusion is the increased redundancy and fault tolerance of the perception system. If one sensor fails or provides erroneous data, the other sensors can still provide sufficient information for the vehicle to operate safely.

For instance, if the GPS signal is lost or corrupted, the vehicle can still rely on data from the IMU, cameras, and LiDAR to estimate its position and navigate through the environment. This redundancy helps the vehicle keep operating through sensor failures or adverse conditions, although estimates that rely on the IMU alone drift over time and need periodic correction from another source.
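The GPS fallback described above can be sketched as dead reckoning with an occasional correction. This is a deliberately simplified one-dimensional example, assuming a fixed time step and a constant blending gain; `track_position` and its parameters are hypothetical names, and a real system would typically use a Kalman-style filter instead of a fixed gain.

```python
def track_position(samples, dt, gain=0.2, pos=0.0, vel=0.0):
    """Estimate 1D position from IMU acceleration, correcting with GPS when available.

    samples is a list of (acceleration, gps_position_or_None) tuples.
    Returns the list of position estimates, one per sample.
    """
    history = []
    for accel, gps in samples:
        # Dead reckoning: integrate acceleration into velocity, then into position.
        vel += accel * dt
        pos += vel * dt
        if gps is not None:
            # GPS fix available: nudge the estimate toward it to cancel drift.
            pos += gain * (gps - pos)
        history.append(pos)
    return history


if __name__ == "__main__":
    # Assumed scenario: GPS drops out for the first two samples, then returns.
    samples = [(1.0, None), (0.0, None), (0.0, 3.5)]
    print(track_position(samples, dt=1.0, gain=0.5))
```

While GPS is missing the estimate relies on integration alone, so any bias in the acceleration readings accumulates until the next fix arrives.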

Enhanced Situational Awareness

Multiple sensor fusion also enables UAVs to have enhanced situational awareness, which is crucial for safe and efficient operation in complex environments. By combining data from different sensors, the vehicle can detect and track objects, classify them based on their characteristics, and predict their future behavior.

This enhanced situational awareness allows the vehicle to make informed decisions and react to changes in the environment, such as avoiding obstacles, following traffic rules, and interacting with other vehicles and pedestrians.

Challenges and Limitations of Multiple Sensor Fusion

Data Synchronization and Calibration

One of the main challenges in implementing multiple sensor fusion is the need for accurate data synchronization and calibration. The data from different sensors must be aligned in time and space to ensure that the fusion algorithm can correctly combine the information.

This requires precise time stamping of sensor data and careful calibration of the sensors’ intrinsic and extrinsic parameters. Any misalignment or inconsistency in the data can lead to errors in the fused output and degrade the performance of the perception system.
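For the time-alignment part of this problem, one simple approach is to resample a sensor stream at the timestamps of another by linear interpolation. The sketch below assumes sorted timestamps on a shared clock and a scalar signal; `interpolate_at` is a hypothetical helper, and spatial (extrinsic) calibration is not covered here.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sensor stream at time t.

    timestamps must be sorted in increasing order and share a clock with t.
    Returns None when t lies outside the recorded range (no extrapolation).
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]  # exact sample available
    t0, t1 = timestamps[i - 1], timestamps[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from t0 to t1
    return values[i - 1] + w * (values[i] - values[i - 1])


if __name__ == "__main__":
    # Assumed data: IMU yaw samples, queried at the capture time of a camera frame.
    imu_times = [0.0, 1.0, 2.0]
    imu_yaw = [0.0, 10.0, 20.0]
    print(interpolate_at(imu_times, imu_yaw, 0.5))
```

Refusing to extrapolate is a conservative choice: a fusion step that receives `None` can skip the sample rather than work from a guessed value.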

Computational Complexity and Real-Time Processing

Another challenge is the computational complexity and real-time processing requirements of multiple sensor fusion algorithms. As the number and diversity of sensors increase, the volume of data to process grows with every added sensor, and the cost of associating and cross-checking measurements between sensors can grow faster still.

This can pose significant challenges for the onboard computing resources of the UAV, which must process the data in real-time to enable timely decision-making and control. Efficient algorithms and hardware accelerators, such as GPUs and FPGAs, are often necessary to meet the computational demands of multiple sensor fusion.

Dealing with Uncertainty and Conflicting Information

Multiple sensor fusion algorithms must also be able to handle uncertainty and conflicting information from different sensors. Sensors can have varying levels of accuracy, noise, and reliability, and their measurements may not always agree with each other.

The fusion algorithm must be able to estimate the uncertainty associated with each sensor’s data and combine the information in a way that minimizes the overall uncertainty and resolves any conflicts. This requires sophisticated probabilistic models and decision-making strategies that can adapt to the changing conditions and characteristics of the sensors.
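A minimal sketch of this idea, assuming two independent scalar measurements with known Gaussian noise variances: measurements that agree are combined by inverse-variance weighting, which minimizes the variance of the result, while measurements that disagree by more than a threshold are flagged as conflicting. The function name, the 3-sigma gate, and the fallback to the lower-variance sensor are illustrative assumptions.

```python
import math


def fuse_or_flag(z1, var1, z2, var2, gate=3.0):
    """Fuse two measurements, or flag a conflict if they disagree too strongly.

    Returns (estimate, variance, conflict_flag).
    """
    # Disagreement expressed in standard deviations of the difference z1 - z2.
    disagreement = abs(z1 - z2) / math.sqrt(var1 + var2)
    if disagreement > gate:
        # Conflict: fall back to the sensor with the lower variance (assumed policy).
        if var1 <= var2:
            return z1, var1, True
        return z2, var2, True
    # Consistent: weight each measurement by the inverse of its variance.
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused_var = 1.0 / (w1 + w2)
    fused = fused_var * (w1 * z1 + w2 * z2)
    return fused, fused_var, False


if __name__ == "__main__":
    print(fuse_or_flag(10.0, 1.0, 12.0, 1.0))   # consistent readings
    print(fuse_or_flag(10.0, 1.0, 30.0, 4.0))   # conflicting readings
```

The fused variance is always smaller than either input variance, which is why fusing consistent measurements reduces overall uncertainty.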

Applications of Multiple Sensor Fusion in UAVs

Autonomous Navigation and Mapping

One of the primary applications of multiple sensor fusion in UAVs is autonomous navigation and mapping. By combining data from cameras, LiDAR, radar, and other sensors, the vehicle can create a detailed 3D map of its environment and use it to plan and execute safe and efficient paths.

This enables UAVs to operate in GPS-denied environments, such as indoors or in urban canyons, and to navigate through complex and dynamic scenes, such as traffic or crowds. Autonomous navigation and mapping are crucial for a wide range of applications, including delivery services, search and rescue, and infrastructure inspection.
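Maps of this kind are often stored as occupancy grids, where each cell holds the belief that it is occupied and every sensor reading updates that belief. The sketch below shows the standard log-odds form of the Bayesian update for a single cell, assuming independent readings; the helper names and the probabilities in the usage example are hypothetical.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def to_prob(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def update_cell(log_odds, p_occupied_given_reading, prior=0.5):
    """Bayesian update of one grid cell in log-odds form.

    p_occupied_given_reading is the sensor model's estimate that the cell is
    occupied given the latest reading. With prior=0.5 the prior term is zero.
    """
    return log_odds + logit(p_occupied_given_reading) - logit(prior)


if __name__ == "__main__":
    cell = 0.0  # log-odds of 0 means probability 0.5 (unknown)
    # Assumed readings: LiDAR and radar both suggest the cell is occupied.
    for reading in (0.7, 0.7):
        cell = update_cell(cell, reading)
    print(to_prob(cell))
```

Working in log-odds turns each update into an addition, which keeps the per-cell cost low when many readings arrive per second.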

Object Detection and Tracking

Another important application of multiple sensor fusion is object detection and tracking. By fusing data from different sensors, the vehicle can detect and classify objects in its environment, such as vehicles, pedestrians, and obstacles, and track their motion over time.

This information is essential for safe and efficient operation in shared environments, where the UAV must avoid collisions and interact with other agents. Object detection and tracking also enable advanced applications, such as surveillance, target following, and gesture recognition.
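Tracking objects over time requires deciding which new detection belongs to which existing track. The following sketch uses greedy nearest-neighbor association with a distance gate, one of the simplest approaches; the function name and the gate value are assumptions, and production trackers often use optimal assignment and motion models instead.

```python
import math


def associate(tracks, detections, max_dist):
    """Greedily match track positions to detection positions by distance.

    tracks and detections are lists of (x, y) tuples.
    Returns (matches, unmatched) where matches maps track index to detection
    index and unmatched lists detection indices that may start new tracks.
    """
    # All candidate pairs, closest first.
    pairs = sorted(
        (math.dist(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    )
    matches, used_tracks, used_dets = {}, set(), set()
    for dist, ti, di in pairs:
        if dist > max_dist:
            break  # remaining pairs are even farther apart
        if ti in used_tracks or di in used_dets:
            continue  # each track and detection is used at most once
        matches[ti] = di
        used_tracks.add(ti)
        used_dets.add(di)
    unmatched = [di for di in range(len(detections)) if di not in used_dets]
    return matches, unmatched


if __name__ == "__main__":
    tracks = [(0.0, 0.0), (10.0, 10.0)]
    detections = [(10.5, 10.0), (0.2, 0.1), (50.0, 50.0)]
    print(associate(tracks, detections, max_dist=2.0))
```

The distance gate prevents a track from being attached to a far-away detection simply because nothing closer was observed in that frame.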

Collaborative Sensing and Swarm Intelligence

Multiple sensor fusion can also enable collaborative sensing and swarm intelligence in multi-UAV systems. By sharing sensor data and fused information among multiple vehicles, the system can achieve a more comprehensive and accurate understanding of the environment and coordinate its actions to achieve common goals.

For example, a swarm of UAVs equipped with multiple sensors can collaboratively map a large area, search for targets, or transport goods in a distributed and efficient manner. Collaborative sensing and swarm intelligence can greatly enhance the capabilities and resilience of UAV systems and enable new applications in fields such as agriculture, environmental monitoring, and disaster response.
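Collaborative mapping can be illustrated by merging the occupancy maps that individual vehicles have built. The sketch below assumes each vehicle stores a sparse grid as a dictionary from cell index to log-odds of occupancy, that all grids share one coordinate frame, and that the vehicles' observations are independent; `merge_maps` and the clamp value are hypothetical.

```python
def merge_maps(maps, clamp=5.0):
    """Merge sparse log-odds occupancy grids from several vehicles.

    maps is an iterable of dicts mapping (row, col) to log-odds of occupancy.
    Log-odds from independent observers add; the result is clamped so that
    no cell becomes so confident that later evidence cannot change it.
    """
    merged = {}
    for grid in maps:
        for cell, log_odds in grid.items():
            total = merged.get(cell, 0.0) + log_odds
            merged[cell] = max(-clamp, min(clamp, total))
    return merged


if __name__ == "__main__":
    # Assumed data: two vehicles with partially overlapping coverage.
    vehicle_a = {(0, 0): 1.0, (0, 1): -0.5}
    vehicle_b = {(0, 1): -0.5, (2, 2): 2.0}
    print(merge_maps([vehicle_a, vehicle_b]))
```

Cells seen by only one vehicle are carried over unchanged, while cells seen by both accumulate evidence, so the merged map covers a larger area with higher confidence where coverage overlaps.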

Future Directions and Research Challenges

Integration of Advanced Sensors and Fusion Algorithms

As sensor technology continues to advance, there is a growing opportunity to integrate new types of sensors and fusion algorithms into UAVs. For example, hyperspectral cameras, thermal imagers, and acoustic sensors can provide additional information about the environment and enable new applications in fields such as precision agriculture and gas leak detection.

Moreover, the development of advanced fusion algorithms, such as deep learning-based methods and probabilistic graphical models, can further improve the accuracy and robustness of the perception system and enable more sophisticated decision-making and control strategies.

Standardization and Interoperability

Another important research challenge is the standardization and interoperability of multiple sensor fusion systems in UAVs. As the number and diversity of sensors and fusion algorithms grow, there is a need for common standards and interfaces that allow different components to work together seamlessly and efficiently.

This requires the development of modular and scalable architectures, as well as the adoption of open-source software and hardware platforms that promote collaboration and innovation in the field.

Safety, Security, and Ethical Considerations

Finally, the development and deployment of UAVs with multiple sensor fusion capabilities raise important safety, security, and ethical considerations. The increased autonomy and situational awareness of these vehicles can pose risks to privacy, data protection, and public safety if not properly regulated and controlled.

Moreover, the use of UAVs for surveillance, targeted advertising, or other potentially harmful applications raises ethical concerns that must be addressed through responsible innovation and governance frameworks.

Frequently Asked Questions (FAQ)

Q: What is the difference between sensor fusion and sensor integration?

A: Sensor fusion involves the combination of data from multiple sensors to create a more accurate and reliable representation of the environment, while sensor integration refers to the physical and electrical integration of sensors into a system.

Q: What are the most common sensors used in autonomous vehicles?

A: The most common sensors used in autonomous vehicles include cameras, LiDAR, radar, ultrasonic sensors, GPS, and IMU.

Q: What are the main advantages of using multiple sensor fusion in UAVs?

A: The main advantages of using multiple sensor fusion in UAVs are improved accuracy and reliability, redundancy and fault tolerance, and enhanced situational awareness.

Q: What are the challenges in implementing multiple sensor fusion in UAVs?

A: The main challenges in implementing multiple sensor fusion in UAVs include data synchronization and calibration, computational complexity and real-time processing, and dealing with uncertainty and conflicting information.

Q: What are some of the future research directions in multiple sensor fusion for UAVs?

A: Some of the future research directions in multiple sensor fusion for UAVs include the integration of advanced sensors and fusion algorithms, standardization and interoperability, and addressing safety, security, and ethical considerations.

Conclusion

Multiple sensor fusion is a critical enabling technology for unmanned autonomous vehicles, providing improved accuracy, reliability, and situational awareness in complex and dynamic environments. By combining data from different sensors, UAVs can navigate safely and efficiently, detect and track objects, and collaborate with other vehicles to achieve common goals.

However, the implementation of multiple sensor fusion in UAVs also poses significant challenges, including data synchronization and calibration, computational complexity, and dealing with uncertainty and conflicting information. Moreover, the development and deployment of UAVs with multiple sensor fusion capabilities raise important safety, security, and ethical considerations that must be addressed through responsible innovation and governance frameworks.

As sensor technology and fusion algorithms continue to advance, there is a growing opportunity to integrate new types of sensors and fusion methods into UAVs and enable new applications in fields such as agriculture, environmental monitoring, and disaster response. However, this also requires the development of common standards and interfaces, as well as the adoption of open-source software and hardware platforms that promote collaboration and innovation in the field.

Multiple sensor fusion offers significant benefits for unmanned autonomous vehicles while posing real engineering and governance challenges. Continued research and development in this field will be essential to realize the full potential of UAVs and enable their safe and effective deployment in a wide range of applications.
