Introduction to Edge-Cloud Hardware

In recent years, the rapid growth of Internet of Things (IoT) devices and the increasing demand for real-time data processing have led to the emergence of edge computing. Edge computing brings computation and data storage closer to the source of data, which reduces latency and the volume of data sent over the network. However, cloud computing remains a crucial component of modern computing infrastructure, offering scalability, flexibility, and cost-effectiveness. This article explores the hardware design considerations for edge computing and cloud computing, highlights how they differ, and looks at how the two can work together.

Edge Computing Hardware Requirements

Low Latency and Real-Time Processing

One of the primary drivers for edge computing is the need for low latency and real-time processing. Edge devices must be capable of quickly processing data and making decisions without relying on a remote server. To achieve this, edge hardware must have sufficient computing power and memory to handle the required workload. Some key considerations for edge hardware include:

- Processor: Edge devices often use low-power processors to balance performance and energy efficiency. Common choices include the ARM-based SoCs found in Raspberry Pi boards and NVIDIA Jetson modules, as well as low-power x86 parts such as Intel Atom.

- Memory: Edge devices require sufficient memory to store and process data locally. The amount of memory depends on the specific application and the volume of data being processed.

- Storage: Edge devices may need local storage to cache data or store intermediate results. Flash-based storage, such as solid-state drives (SSDs) or eMMC, is commonly used because it has no moving parts, which makes it faster to access and more tolerant of shock and vibration than spinning disks.
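As a rough illustration of how memory needs follow from data volume, the sketch below estimates the RAM required to hold one processing window of raw samples. The function name, parameters, and workload figures are hypothetical and chosen only for the arithmetic; real sizing also has to account for the operating system, application code, and any model weights.

```python
def buffer_bytes(num_sensors: int, sample_rate_hz: int,
                 bytes_per_sample: int, window_seconds: int) -> int:
    """Bytes needed to hold one processing window of raw samples in RAM."""
    samples = num_sensors * sample_rate_hz * window_seconds
    return samples * bytes_per_sample


# Hypothetical workload: 8 sensors at 1 kHz, 4-byte samples, 10-second window.
needed = buffer_bytes(num_sensors=8, sample_rate_hz=1000,
                      bytes_per_sample=4, window_seconds=10)
print(f"{needed / 1024:.1f} KiB per window")  # prints "312.5 KiB per window"
```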

Power Efficiency and Thermal Management

Edge devices are often deployed in remote or resource-constrained environments, making power efficiency and thermal management crucial considerations. Edge hardware must be designed to operate within the available power budget and dissipate heat effectively. Some strategies for improving power efficiency and thermal management include:

- Low-power components: Selecting components with low power consumption, such as low-voltage processors and memory modules, can help reduce the overall power requirements of edge devices.

- Passive cooling: Passive cooling techniques, such as heat sinks and thermal pads, can dissipate heat without the need for active cooling systems, reducing power consumption and increasing reliability.

- Power management: Implementing power management techniques, such as dynamic voltage and frequency scaling (DVFS) and sleep modes, can help optimize power consumption based on the workload.
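The DVFS idea in the list above can be sketched as picking the slowest operating point that still meets the current demand. The frequency and voltage pairs below are made-up values for illustration, not figures for any real chip, and the power estimate uses the common approximation that dynamic power scales with voltage squared times frequency.

```python
# Hypothetical operating points: (frequency in MHz, core voltage in volts),
# sorted from slowest to fastest.
OPERATING_POINTS = [(600, 0.80), (1000, 0.90), (1500, 1.05)]


def pick_operating_point(required_mhz: float) -> tuple:
    """Return the slowest operating point that still meets the demand."""
    for freq, volts in OPERATING_POINTS:
        if freq >= required_mhz:
            return freq, volts
    return OPERATING_POINTS[-1]  # demand exceeds the top point: run flat out


def relative_dynamic_power(freq_mhz: float, volts: float) -> float:
    """Dynamic power relative to the fastest point, assuming P ~ V^2 * f."""
    top_freq, top_volts = OPERATING_POINTS[-1]
    return (volts ** 2 * freq_mhz) / (top_volts ** 2 * top_freq)


freq, volts = pick_operating_point(required_mhz=550)
print(freq, volts, round(relative_dynamic_power(freq, volts), 2))
```

Under these assumed numbers, a light workload runs at the 600 MHz point and draws roughly a quarter of the dynamic power of the fastest point, which is why lowering voltage together with frequency saves more than lowering frequency alone.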

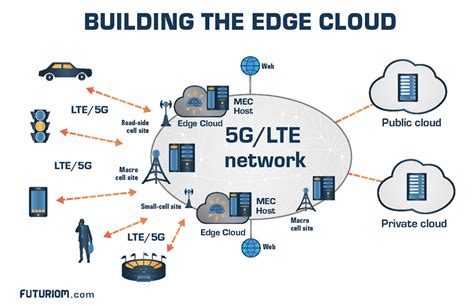

Connectivity and Communication

Edge devices often need to communicate with other devices, sensors, or the cloud. Connectivity and communication are essential considerations for edge hardware design. Some common connectivity options for edge devices include:

- Wireless: Wireless technologies, such as Wi-Fi, Bluetooth, and cellular networks (e.g., 4G/5G), enable edge devices to communicate wirelessly with other devices or the cloud.

- Wired: Wired connections, such as Ethernet or USB, provide reliable and high-bandwidth communication between edge devices and other components of the system.

- Protocols: Edge devices must support relevant communication protocols, such as MQTT, CoAP, or HTTP, to enable seamless data exchange and interoperability with other devices and systems.
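To make the protocol point more concrete, here is a minimal sketch of how MQTT-style topic filters route messages, where `+` matches exactly one topic level and `#` matches all remaining levels. The function name and topic strings are hypothetical, and the sketch is simplified: it does not handle the special treatment of topics beginning with `$` or validate malformed filters.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Check an MQTT-style topic against a filter with '+' and '#' wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level, and it
            # matches everything that remains (including nothing).
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    return len(filter_levels) == len(topic_levels)


print(topic_matches("plant/+/temperature", "plant/line1/temperature"))
print(topic_matches("plant/#", "plant/line1/motor/rpm"))
print(topic_matches("plant/+", "plant/line1/motor"))
```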

Cloud Computing Hardware Requirements

Scalability and Elasticity

Cloud computing infrastructure must be designed to handle varying workloads and scale resources dynamically based on demand. Scalability and elasticity are key considerations for cloud hardware design. Some factors that enable scalability and elasticity include:

- Virtualization: Virtualization technologies, such as virtual machines (VMs) and containers, allow multiple applications or services to run on the same physical hardware, enabling efficient resource utilization and scalability.

- Load balancing: Load balancing techniques distribute workloads across multiple servers or instances to ensure optimal performance and resource utilization. Hardware load balancers or software-based solutions can be used to achieve load balancing.

- Auto-scaling: Auto-scaling mechanisms automatically adjust the number of instances or resources based on the workload demand, ensuring that the system can handle peak loads without overprovisioning.
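One common way to express the auto-scaling behavior described above is a target-tracking rule: scale the instance count in proportion to how far the observed load is from a target. The sketch below assumes average CPU utilization (in percent) as the metric; the function name, limits, and numbers are illustrative and not taken from any particular cloud provider.

```python
import math


def desired_instances(current: int, observed_cpu_pct: float,
                      target_cpu_pct: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Scale so that per-instance CPU utilization approaches the target."""
    wanted = math.ceil(current * observed_cpu_pct / target_cpu_pct)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, wanted))


# 4 instances at 90% CPU with a 60% target: scale out to 6.
print(desired_instances(current=4, observed_cpu_pct=90, target_cpu_pct=60))
```

Real auto-scalers usually add cooldown periods and tolerance bands around the target so that small fluctuations do not cause constant scaling up and down.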

High-Performance Computing

Cloud computing infrastructure often needs to support high-performance computing (HPC) workloads, such as scientific simulations, machine learning, and data analytics. HPC hardware requirements include:

- Processors: Cloud servers typically use high-performance processors, such as Intel Xeon or AMD EPYC, to handle compute-intensive workloads. These processors offer multiple cores and high clock speeds to deliver the necessary computing power.

- Accelerators: Accelerators, such as graphics processing units (GPUs) or field-programmable gate arrays (FPGAs), can significantly boost the performance of specific workloads, such as machine learning or video processing.

- Interconnect: High-speed interconnects, such as InfiniBand or 100 Gigabit Ethernet, enable fast communication between servers and storage systems, reducing latency and increasing throughput.

Data Storage and Durability

Cloud computing relies on robust and scalable storage solutions to store and manage large volumes of data. Data storage and durability are critical considerations for cloud hardware design. Some key aspects include:

- Redundancy: Cloud storage systems employ redundancy techniques, such as replication or erasure coding, to ensure data durability and availability. Multiple copies of data are stored across different servers or data centers to protect against hardware failures or disasters.

- Tiered storage: Cloud providers typically offer several storage types, such as object, block, and file storage, and often several tiers within a type (for example, for frequently accessed versus archival data), to cater to various performance and cost requirements. This allows users to select the appropriate storage solution based on their needs.

- Distributed file systems: Distributed file systems, such as Hadoop Distributed File System (HDFS) or Ceph, enable scalable and fault-tolerant storage by distributing data across multiple nodes in a cluster.
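The redundancy bullet above mentions erasure coding. Its simplest form is a single XOR parity block, sketched below: any one lost block can be rebuilt from the survivors plus the parity. The block contents are arbitrary examples, and this is only an illustration of the principle; production systems generally use stronger codes (such as Reed-Solomon) that tolerate more than one simultaneous loss.

```python
from functools import reduce


def xor_blocks(blocks: list) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data = [b"edge", b"clou", b"d-hw"]   # three equal-length data blocks
parity = xor_blocks(data)            # one extra parity block

# Simulate losing block 1, then rebuild it from the survivors plus parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == data[1])  # prints True
```

In this layout, three data blocks plus one parity block occupy about 1.33 times the raw data size, whereas keeping three full replicas occupies 3 times. The trade-off is that recovery requires reading and combining several blocks rather than copying one.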

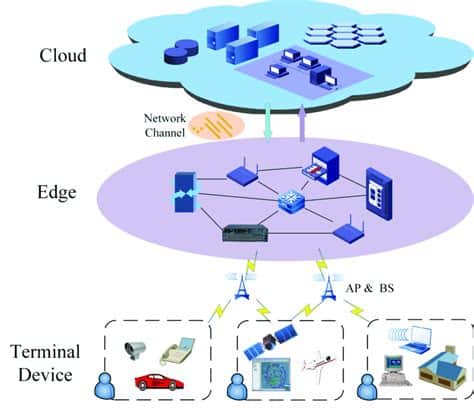

Edge-Cloud Collaboration

While edge computing and cloud computing have distinct hardware requirements, they are not mutually exclusive. In many scenarios, edge devices and cloud infrastructure work together to deliver optimal performance and functionality. Edge-cloud collaboration involves leveraging the strengths of both edge and cloud computing to create a hybrid solution. Some aspects of edge-cloud collaboration include:

- Data preprocessing: Edge devices can preprocess and filter data before sending it to the cloud, reducing the amount of data transferred and the processing burden on the cloud infrastructure.

- Workload offloading: Computationally intensive tasks that exceed the capabilities of edge devices can be offloaded to the cloud for processing. The cloud can perform complex analytics or machine learning tasks and send the results back to the edge devices.

- Data aggregation: Edge devices can aggregate data from multiple sources before sending it to the cloud, reducing the frequency of data transmission and minimizing network bandwidth usage.

- Cloud-based management: Cloud platforms can be used to manage and monitor edge devices, enabling centralized control, software updates, and remote troubleshooting.
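The preprocessing and aggregation steps above can be sketched as a small function that an edge device might run on each window of readings before uploading. The function name, the valid range, and the sample values are hypothetical; the point is that several raw readings collapse into one short summary record.

```python
from statistics import mean


def summarize_window(readings: list, low: float, high: float) -> dict:
    """Drop out-of-range readings, then reduce the rest to one summary record."""
    valid = [r for r in readings if low <= r <= high]
    if not valid:
        return {"count": 0}
    return {
        "count": len(valid),
        "min": min(valid),
        "max": max(valid),
        "mean": round(mean(valid), 2),
    }


# Hypothetical temperature window; -999.0 stands in for a sensor glitch.
window = [21.4, 21.6, -999.0, 21.5, 22.1]
summary = summarize_window(window, low=-40.0, high=85.0)
print(summary)
```

Only the summary would be sent to the cloud, so the upload size stays constant no matter how many raw samples fall into each window.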

Hardware Design Considerations for Edge-Cloud Collaboration

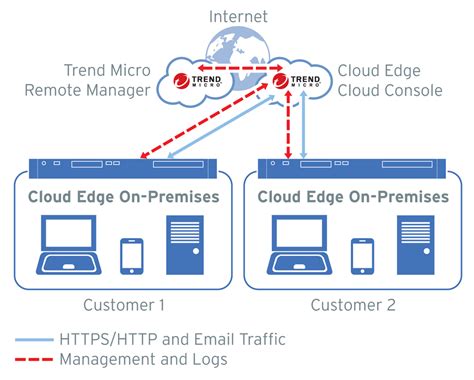

When designing hardware for edge-cloud collaboration, several factors need to be considered to ensure seamless integration and optimal performance. Some key considerations include:

- Interoperability: Edge devices and cloud infrastructure must be compatible and able to communicate effectively. Standardized protocols and APIs should be used to enable seamless data exchange and control between the edge and the cloud.

- Security: Edge-cloud collaboration introduces additional security challenges, because data travels between the edge and the cloud over networks that may not be trusted. Hardware-based security features, such as secure boot, trusted execution environments (TEEs), and encryption accelerators, can help protect data and ensure secure communication.

- Bandwidth optimization: Edge devices should be designed to minimize the amount of data transmitted to the cloud, conserving network bandwidth and reducing latency. Techniques such as data compression, filtering, and aggregation can help optimize bandwidth usage.

- Fault tolerance: Edge devices should be designed to handle network disruptions or cloud outages gracefully. Local processing capabilities and data caching mechanisms can enable edge devices to continue operating independently when the connection to the cloud is interrupted.
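The fault-tolerance point above is often implemented as a store-and-forward buffer: messages are queued locally while the cloud link is down and sent in order once it returns. The class below is a minimal in-memory sketch under that assumption; the class name, the `send` callable, and the capacity are hypothetical, and a real device would typically persist the queue to flash so that it survives a reboot.

```python
from collections import deque


class StoreAndForward:
    """Buffer outgoing messages while the cloud link is unavailable."""

    def __init__(self, send, capacity: int = 1000):
        self._send = send                        # callable; returns True on success
        self._pending = deque(maxlen=capacity)   # oldest entries drop when full

    def publish(self, message) -> None:
        self._pending.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages in order; stop at the first failure."""
        sent = 0
        while self._pending and self._send(self._pending[0]):
            self._pending.popleft()
            sent += 1
        return sent


# Simulated link: a stand-in for a real network call.
link = {"up": False}
delivered = []


def fake_send(message) -> bool:
    if link["up"]:
        delivered.append(message)
    return link["up"]


buffer = StoreAndForward(fake_send, capacity=3)
for reading in range(5):
    buffer.publish(reading)   # link is down: only the newest 3 are kept

link["up"] = True
buffer.flush()
print(delivered)  # prints [2, 3, 4]
```

Dropping the oldest entries when the buffer fills is one design choice among several; an application that cannot lose data might instead block, downsample, or spill to disk.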

Comparison Table: Edge Computing vs Cloud Computing Hardware

| Aspect | Edge Computing Hardware | Cloud Computing Hardware |
|---|---|---|
| Computing Power | Low to moderate, sufficient for local processing | High, capable of handling complex workloads |
| Memory | Limited, optimized for local data processing | Abundant, scalable based on demand |
| Storage | Local storage for caching and intermediate results | Scalable and durable storage solutions |
| Power Efficiency | Critical, designed for low power consumption | Less critical, focus on performance |
| Thermal Management | Passive cooling, heat dissipation in constrained environments | Active cooling, data center-level thermal management |
| Connectivity | Wireless and wired, support for IoT protocols | High-speed interconnects, support for cloud APIs |
| Scalability | Limited, designed for specific workloads | Highly scalable, able to handle varying workloads |
| Elasticity | Not a primary focus, fixed resource allocation | Essential, dynamic resource allocation based on demand |
| High-Performance Computing | Limited, focus on real-time processing | Supported, using high-performance processors and accelerators |
| Data Storage and Durability | Local storage, limited durability | Redundant and durable storage solutions |

Frequently Asked Questions (FAQ)

- What is the main difference between edge computing and cloud computing hardware?

  The main difference lies in their purpose and deployment. Edge computing hardware is designed for low-latency, real-time processing at the source of data, while cloud computing hardware focuses on scalability, elasticity, and handling complex workloads in a centralized manner.

- Can edge devices function independently without a connection to the cloud?

  Yes, edge devices are designed to have sufficient computing power and storage to perform local processing and decision-making independently. However, for more complex tasks or long-term data storage, collaboration with the cloud is often necessary.

- What are the benefits of edge-cloud collaboration?

  Edge-cloud collaboration combines the strengths of both edge and cloud computing. It enables real-time processing at the edge while leveraging the scalability and advanced processing capabilities of the cloud. This collaboration optimizes performance, reduces latency, and efficiently utilizes network bandwidth.

- How does hardware design affect power efficiency in edge computing?

  Edge devices often operate in resource-constrained environments, making power efficiency crucial. Hardware design for edge computing focuses on low-power components, passive cooling techniques, and power management strategies to minimize power consumption while maintaining performance.

- What role do accelerators play in cloud computing hardware?

  Accelerators, such as GPUs and FPGAs, are used in cloud computing hardware to boost the performance of specific workloads. They are particularly useful for compute-intensive tasks like machine learning, scientific simulations, and video processing, enabling faster execution and improved efficiency.

Conclusion

Hardware design for edge computing and cloud computing differs based on their specific requirements and deployment scenarios. Edge computing hardware prioritizes low latency, real-time processing, power efficiency, and connectivity, while cloud computing hardware focuses on scalability, elasticity, high-performance computing, and data storage durability.

However, the true potential lies in the collaboration between edge and cloud computing. By leveraging the strengths of both, organizations can create hybrid solutions that deliver optimal performance, flexibility, and cost-effectiveness. Edge devices can preprocess and filter data, while the cloud can handle complex analytics and provide centralized management.

When designing hardware for edge-cloud collaboration, considerations such as interoperability, security, bandwidth optimization, and fault tolerance become crucial. By addressing these aspects, hardware designers can create seamless and efficient edge-cloud systems that cater to the evolving needs of modern computing.

As the demand for real-time data processing and IoT applications continues to grow, the importance of edge-cloud hardware will only increase. By understanding the unique requirements and design considerations for edge computing and cloud computing hardware, organizations can make informed decisions and deploy the most suitable solutions for their specific use cases.
